How the new LLMs hosted on Atlassian work: a guide for companies seeking secure AI

February 9, 2026

A couple of weeks ago, Atlassian announced its new offering of LLMs hosted directly on its Cloud platform, known as Atlassian-hosted LLMs. The offering was first introduced during Team’25 Europe, and it is now generally available.

This means that, instead of always relying on third‑party models (such as those from OpenAI or Meta), Atlassian can offer language models hosted and managed within its own cloud infrastructure.

Key points of the announcement

There are several strategic and practical reasons why this is significant:

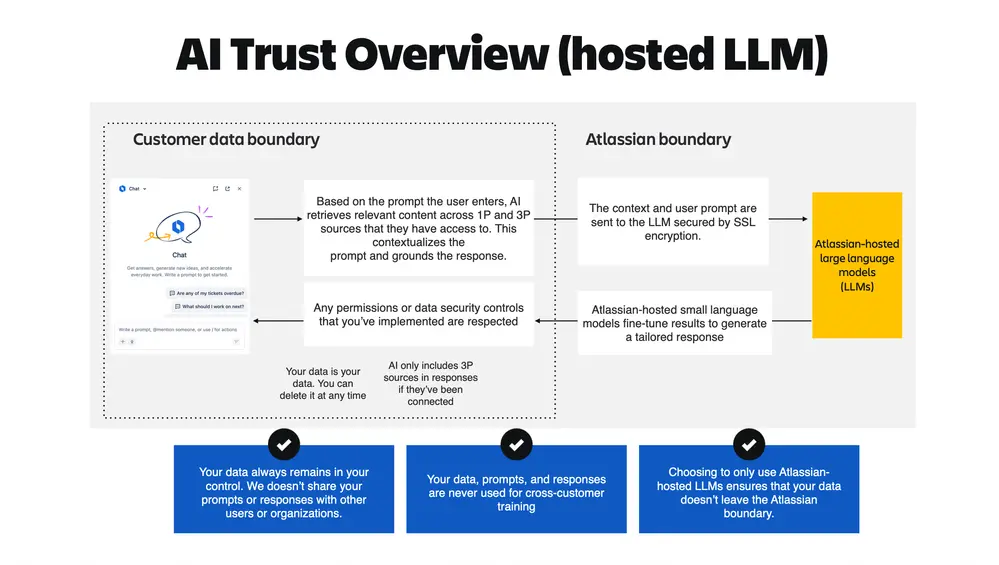

Security and data control

By having LLMs hosted within its own cloud, Atlassian reduces its dependence on third parties for language processing, which keeps customer information more secure within its own cloud boundaries.

Deeply integrated AI features

Hosted LLMs allow AI features to be more deeply integrated into Atlassian applications such as Jira, Confluence and Jira Service Management.

Optimized performance

By controlling the infrastructure and the models, Atlassian can optimize the performance of the LLMs for its own use cases and choose the most appropriate models for each type of task.

Risk & dependence reduction

Although Atlassian still uses some models from external providers, having its own hosted set reduces its dependence on external vendors and the associated risks (such as changes in licensing, availability or usage policies).

This announcement reflects a natural evolution of Atlassian's existing Intelligence engine (“Rovo AI”), which combines LLMs with real work data from the Atlassian platform to deliver smarter experiences in collaboration and automation.

Rovo has been designed from the ground up to respect user permissions, following responsible technology principles. Rovo’s own governance is now complemented by LLMs hosted directly by Atlassian.

How Atlassian-hosted LLMs work

First of all, it’s important to know that this Atlassian-hosted LLM option is only available for customers on an Enterprise plan.

That’s why they’re an ideal option for organizations concerned about security and compliance, with a high volume of users and environments. They are especially useful for teams in regulated industries or teams with strict internal data management policies that want the benefits of AI without increased data exposure or additional risk from vendors. By keeping inference within Atlassian’s cloud trust boundary, these teams get enterprise‑grade safeguards while enabling modern AI use cases in Jira, Confluence and Rovo.

Once your organization enrolls, supported AI features switch to models hosted entirely on the Atlassian cloud platform. Neither your prompts nor their context leave Atlassian’s trust boundary for model processing.

What’s next?

To start using Atlassian-hosted LLMs, organization administrators must agree to participate in the program and request it by opening a ticket with Atlassian (remember, this is only possible if you are an Enterprise customer).

Once validated, Atlassian-hosted LLMs will be enabled for all of the organization’s sites. If you have any questions, you can refer to Atlassian's user guide.

A strategic step

The addition of LLMs hosted directly by Atlassian marks an important step towards AI for enterprises that is even more secure, integrated and optimized. By offering models that operate within its own cloud environment, Atlassian is positioning itself to:

- Improve data security and compliance

- Offer deeper and more contextual AI experiences

- Reduce risks associated with external providers

- Drive productivity and automation across all teams using its applications

This advancement is not only technical, but also strategic: it brings generative AI closer to the heart of how organizations collaborate and work every day.

Would you like to evaluate whether Atlassian-hosted LLMs fit your organization's reality? At Sngular, we can help you analyze your environment, compliance requirements, and use cases. Let's talk.
