Why Speak to LLMs in English? The Technical Reality Behind AI's Most Repeated Advice

September 29, 2025

The Advice We've All Heard

"If you want better results, speak to it in English." This is the unofficial advice circulating in every AI forum, every ChatGPT tutorial, and every conversation among developers. We've normalized it so much that many Spanish-speaking professionals have adopted English as their default language for interacting with language models, even when they intend to generate content in Spanish.

But where does this recommendation really come from? Is it just an urban myth in the tech community, or are there real technical reasons behind it? And more importantly: what is the true cost of following this advice?

The Anatomy of the Problem: What are Tokenizers?

The Invisible Process That Determines Everything

To understand why Spanish has technical disadvantages in LLMs, we need to delve into one of the most critical and least understood components of these systems: tokenizers. If LLMs are the brain of AI, tokenizers are the nervous system that converts our words into impulses the model can process.

Imagine an LLM is like an extraordinary chef, but one who can only cook with ingredients that come in specific pre-cut portions. The tokenizer is the sous chef who must cut all the ingredients (our text) into those exact portions before the main chef can work. The way these ingredients are cut will determine the final quality of the dish.

How an LLM Actually "Reads" Your Text

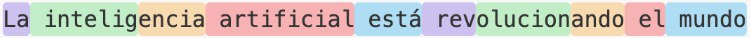

When you write "La inteligencia artificial está revolucionando el mundo" (Artificial intelligence is revolutionizing the world), here's what happens under the hood:

Step 1: Tokenization

Original text: "La inteligencia artificial está revolucionando el mundo"

Tokens in GPT-4:

10 tokens total

10 tokens total

For comparison, the same idea in English:

Original text: "Artificial intelligence is revolutionizing the world"

Tokens in GPT-4:

8 tokens total

8 tokens total

Do you notice the difference? The Spanish phrase requires 25% more tokens to express the same idea. And this is just a simple example.

The Historical Bias That Affects Us Today

The tokenizers of the most popular LLMs were designed using a technique called Byte Pair Encoding (BPE), trained primarily on English text corpora. This process identifies the most frequent character combinations and converts them into unique tokens.

The fundamental problem: Since English dominated the training data, the most common combinations are inherently Anglocentric:

- "ing" → single token (appears constantly in English)

- "tion" → single token (super common in English)

- "ando" → divided into multiple tokens (less frequent in the data)

- "ción" → divided into multiple tokens (Spanish underrepresented)

A Revealing Example: Technical Words

Let's see what happens with technical vocabulary, especially relevant for professionals:

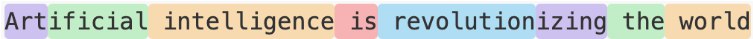

Word: "desarrollador" (developer)

GPT-4 Tokenization:

4 tokens

4 tokens

Word: "developer"

GPT-4 Tokenization:

1 token

1 token

Result: The Spanish word needs 4x more tokens than its English equivalent. This is not a coincidence; it's a direct result of the bias in the tokenizer's training data.

Beyond Individual Words: The Compound Effect

The problem amplifies when we consider complete texts. Let's analyze a typical technical paragraph:

Text in Spanish (44 words):

"Nuestro equipo de desarrolladores está implementando una arquitectura de microservicios basada en contenedores para mejorar la escalabilidad y mantenibilidad del sistema. La infraestructura utiliza tecnologías de orquestación avanzadas que permiten el despliegue automatizado y la monitorización en tiempo real de todos los componentes distribuidos."

Tokenization (GPT-4o): 62 tokens

Equivalent in English (32 words):

"Our development team is implementing a container-based microservices architecture to improve system scalability and maintainability. The infrastructure uses advanced orchestration technologies that enable automated deployment and real-time monitoring of all distributed components."

Tokenization (GPT-4o): 39 tokens

Real Difference: The Spanish text requires 58.9% more tokens to express the same technical concept.

The Numbers That Matter: Four Real Impacts of Fragmentation

Why This Matters More Than You Think

We've seen how "desarrollador" becomes four fragments while "developer" remains whole, and how a technical paragraph in Spanish needs 58.9% more tokens. But these numbers are not just academic curiosities. The fragmentation of Spanish has four direct and measurable impacts on your real experience with LLMs.

1. Cost per Interaction: Every Fragmented Token Comes Out of Your Pocket

The most immediate reality is economic: you pay for every token processed, and Spanish systematically generates more tokens to express the same ideas.

Let's take the real example of the microservices paragraph we analyzed earlier. In Spanish, it consumes 62 tokens; in English, just 39. If you use GPT-4 at $30 per million tokens, each time you generate that technical explanation, you're paying $0.00186 in Spanish versus $0.00117 in English. A difference of $0.00069 per query.

Does it seem insignificant? Multiply by 10,000 monthly queries typical of a medium-sized application, and that difference becomes $6.90 monthly, or $82.80 annually. You're not going broke, but you're paying a 58.9% tax for using your native language.

The extreme example we saw was with "desarrollador" (4 tokens) versus "developer" (1 token). If your application frequently generates technical terminology, you're paying four times more for words that should cost the same.

2. Context Limit: Losing Valuable Mental Space

LLMs have a limited "attention window": they can only process a certain amount of tokens simultaneously. GPT-4 can handle up to 128,000 tokens of context, but every wasted Spanish token is space you can't use for useful information.

Let's go back to the example of "desarrollador" fragmented into 4 tokens. In a typical technical document with 50 similar specialized terms:

- Spanish version: 50 terms × 4 average tokens = 200 tokens just in vocabulary

- English version: 50 terms × 1 average token = 50 tokens

Result: 150 wasted tokens that could have been used for more context, more examples, or more detailed instructions.

If you're working near the context limit of a model (say, 4,000 tokens for a practical case), the Spanish version can effectively process 3,800 tokens of real content, while the English version utilizes 3,950 tokens. You lose 150 tokens of useful capacity, which represents 3.8% less processable information for the same context limit.

3. Processing Speed: More Tokens Equal More Waiting Time

Every additional token requires additional computation. It's not a perfectly linear relationship, but there is a clear correlation: more tokens = more processing time.

Using our example of the technical paragraph (62 tokens Spanish vs 39 English), if an English query is processed in 800 milliseconds, the Spanish version will need approximately:

800ms × (62 ÷ 39) = 1,272ms

A difference of 472 milliseconds that users perceive as slowness. In an interactive conversation, this additional latency breaks the natural flow of dialogue.

Scale this to an application that processes 100 queries per hour for 16 hours a day (1,600 queries), and you're investing 12.6 additional minutes daily just in processing the inefficiency of the Spanish tokenizer.

4. Quality of Attention: The Model Has to Work Harder to Understand You

This is perhaps the most subtle but important impact. When "desarrollador" is fragmented into \["desarr", "oll", "ador"\], the model must reconstruct the full meaning from fragments that individually can have multiple interpretations.

Think of it as a conversation where every technical word reaches you divided into separate syllables, with noise in between. Your brain has to work extra to reconstruct the meaning. LLMs face the same challenge: they must dedicate additional attention resources to understand what you really meant.

The practical consequence is that the model has less "mental capacity" available for the actual task. Instead of concentrating completely on generating the best response, it must dedicate part of its attention to solving linguistic puzzles caused by fragmentation.

This loss of attention quality can manifest in less precise responses, less profound reasoning, or simply a feeling that the model "doesn't understand you as well" in Spanish.

The Compound Effect: When All Four Impacts Combine

The most problematic aspect is not any of these factors separately, but how they amplify each other. You pay more for less efficient tokens, which consume more context space, require more processing time, while the model struggles to maintain the same quality of attention.

The result is a systemic degradation of the experience that goes beyond simple economic cost. Your interaction with the LLM becomes objectively less efficient in Spanish, not due to limitations of the language itself, but due to technical decisions made during the development of these systems.

Does This Mean You Should Abandon Spanish?

Not necessarily. These four impacts are measurable technical facts, but the decision of which language to use must include factors that go beyond pure efficiency: the naturalness of the response for your audience, the cultural accuracy of the content, and the value of maintaining your linguistic identity.

The key is to understand the real costs to make informed decisions, not to accept generic advice about using English "because it's better." Now you know exactly why English can be more efficient, and you can decide if that efficiency is worth more than the benefits of operating in your native language.

Our latest news

Interested in learning more about how we are constantly adapting to the new digital frontier?

Insight

February 9, 2026

How the new LLMs hosted on Atlassian work: a guide for companies seeking secure AI

Insight

December 26, 2025

Ferrovial improves heavy construction efficiency with ConnectedWorks

Event

November 24, 2025

White Mirror 2025: the blank mirror reflecting what is yet to come